Projects

Gaussian Processes for Social Scientists: A powerful tool for addressing model-dependency and uncertainty

Doeun Kim, Chad Hazlett, Soonhong Cho

The Gaussian Process (GP) is a modeling tool that elegantly combines a highly flexible yet easy-to-understand approach to non-linear regression with rigorous uncertainty estimation. Unlike conventional approaches, it produces uncertainty estimates that reflect uncertainty over the true model and that consequently grow wider in areas with less data.

We offer an accessible explanation of GPs for social scientists and an estimation approach (with software) that avoids most user-driven hyperparameter tuning. These approaches produce more appropriate confidence intervals for conditional or pointwise estimates under poor overlap, and produce reliable inferences for approaches involving prediction at or beyond the edge of training data, such as regression discontinuity designs.
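The claim that GP uncertainty widens where data are sparse can be illustrated with a minimal sketch. This is not the project's software: it assumes a squared-exponential kernel with hand-fixed hyperparameters (`length_scale`, `variance`, `noise_var` are illustrative choices, whereas the approach described above avoids most user-driven tuning), and the data are simulated.

```python
import numpy as np

def rbf_kernel(a, b, length_scale=1.0, variance=1.0):
    """Squared-exponential kernel between two 1-D arrays of inputs."""
    sq_dist = (a[:, None] - b[None, :]) ** 2
    return variance * np.exp(-0.5 * sq_dist / length_scale**2)

def gp_posterior(x_train, y_train, x_test, noise_var=0.09):
    """Posterior mean and sd of the latent function at x_test (zero prior mean)."""
    K = rbf_kernel(x_train, x_train) + noise_var * np.eye(len(x_train))
    K_s = rbf_kernel(x_train, x_test)
    prior_var = np.ones(len(x_test))  # k(x, x) = variance = 1 for this kernel
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))
    mean = K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    var = prior_var - np.sum(v**2, axis=0)
    return mean, np.sqrt(np.maximum(var, 0.0))

# Simulated (hypothetical) training data, observed only on [-2, 2].
rng = np.random.default_rng(0)
x_train = np.linspace(-2.0, 2.0, 20)
y_train = np.sin(x_train) + rng.normal(scale=0.3, size=x_train.size)

# One test point inside the data, one just past the edge, one far away.
x_test = np.array([0.0, 2.5, 5.0])
mean, sd = gp_posterior(x_train, y_train, x_test)
for x, m, s in zip(x_test, mean, sd):
    print(f"x = {x:4.1f}  posterior mean = {m:6.3f}  posterior sd = {s:.3f}")
```

The posterior standard deviation is smallest inside the training range and approaches the prior standard deviation far from the data, which is the behavior that matters for designs that predict at or beyond the edge of the data.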

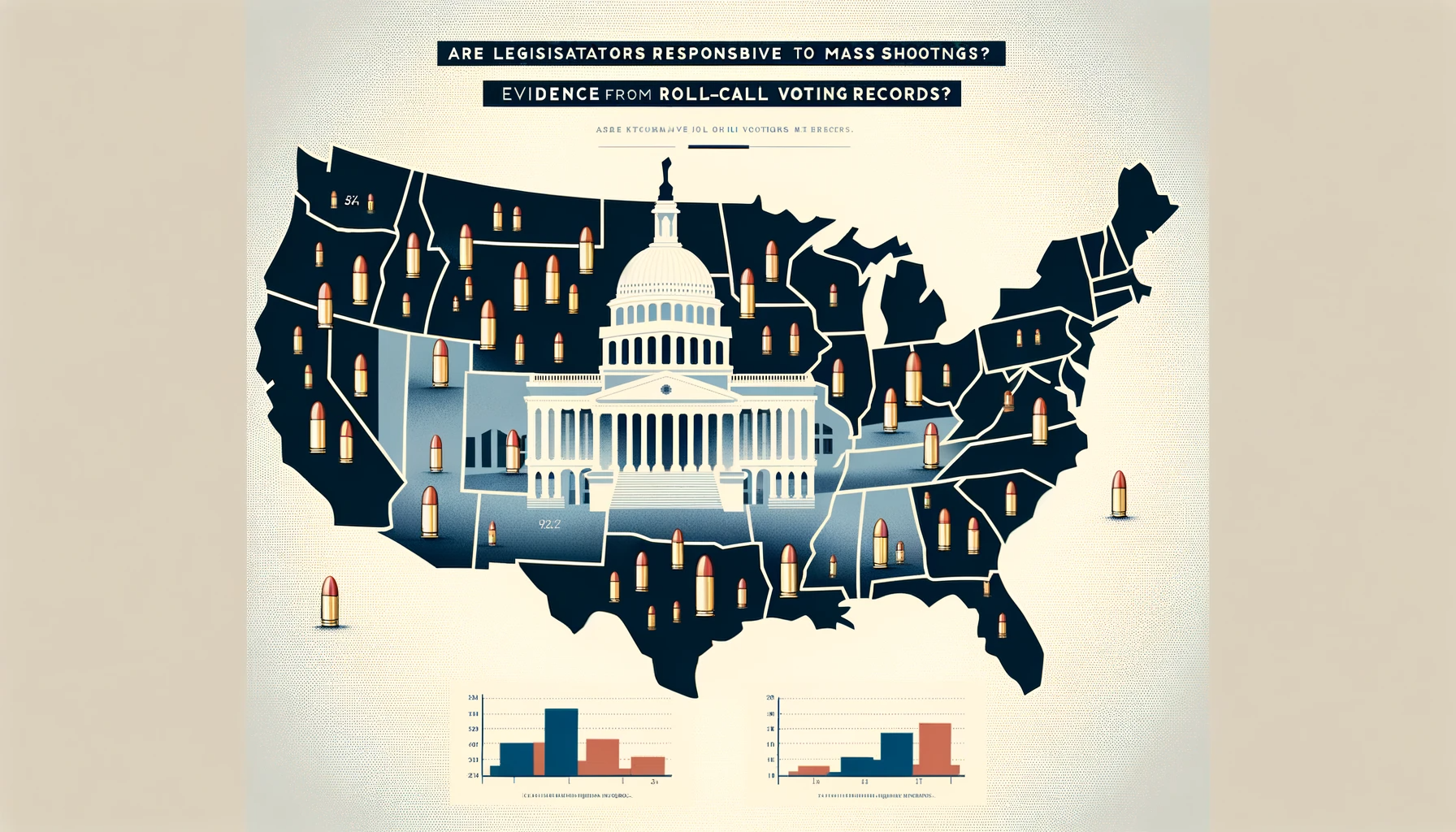

Are legislators responsive to mass shootings? Evidence from roll-call voting records

Jack Kappelman, Haotian Chen, Daniel Thompson

The U.S. leads the world in the number of violent mass shootings that occur each year, yet policymaking on gun violence prevention remains stagnant and polarized along party lines. Are legislators responsive to mass shootings? To answer this, we aim to use modern methods of causal inference to investigate whether the occurrence of a mass shooting within or near a legislator's district affects their voting behavior on gun policy measures. We will pair a dataset on all 111 public mass shootings from 2000 to 2022 with an independently collected dataset of state legislators’ roll-call voting records on the over 15,000 gun-related bills introduced in state legislatures during this period. We seek to shed light on how the government responds, or fails to respond, to mass shootings, how proximity to these events affects legislative decision-making, and how these decisions affect public health outcomes.

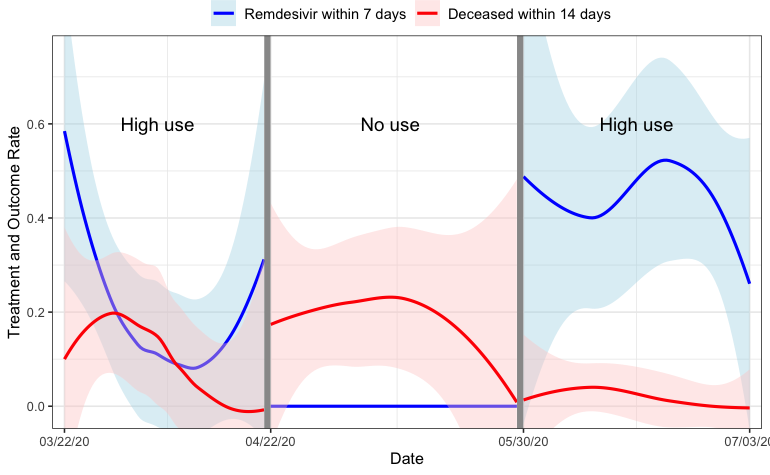

Safe learning outside of randomized trials: Application of the stability-controlled quasi-experiment to the effects of three COVID-19 therapies

Onyebuchi Arah, Chad Hazlett, David Ami Wulf, Brian L. Hill, Jeffrey N. Chiang, David Goodman-Meza, Bogdan Pasaniuc, Kristine M. Erlandson, Brian T. Montague

What could have or should have been learned about the effects of experimental COVID therapies from their uses outside of randomized trials? Such questions are fraught because patients and physicians need to make decisions with the available information, but such observational evidence is vulnerable to misleading biases. We consider the “stability controlled quasi-experiment,” which yields a valid treatment effect estimate for any given assumption regarding the “baseline trend” (how much the average outcome, absent treatment, would have shifted on its own between successive cohorts). We investigate the effects of three therapies (remdesivir, dexamethasone, and hydroxychloroquine) using small samples from outside of randomized trials. We find that over the range of baseline trend assumptions we argue to be plausible, the corresponding range of causal estimates is wide but informative and consistent with the results of eventual randomized trials.
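The logic of mapping each baseline trend assumption to an effect estimate can be sketched as follows. The sketch assumes the simple two-cohort form of the estimator, in which the change in the average outcome, net of the assumed baseline trend, is divided by the change in the share treated. The function name, argument names, and all numbers are hypothetical and are not taken from the study.

```python
def scqe_estimate(mean_pre, mean_post, share_treated_pre, share_treated_post,
                  baseline_trend):
    """Effect estimate implied by one assumed baseline trend (two-cohort form).

    baseline_trend is the assumed shift in the average outcome between
    cohorts that would have occurred absent treatment.
    """
    change_in_share = share_treated_post - share_treated_pre
    if change_in_share == 0:
        raise ValueError("Treatment share must differ between cohorts.")
    return (mean_post - mean_pre - baseline_trend) / change_in_share

# Hypothetical cohorts: mortality falls from 0.30 to 0.25 while the share
# of patients treated rises from 0 to 0.5.
assumed_trends = [-0.05, 0.0, 0.05]
estimates = [
    scqe_estimate(0.30, 0.25, 0.0, 0.5, baseline_trend=trend)
    for trend in assumed_trends
]
for trend, est in zip(assumed_trends, estimates):
    print(f"assumed baseline trend {trend:+.2f} -> estimated effect {est:+.2f}")
```

Reporting the estimate across a range of plausible trends, rather than at a single assumed value, is what produces a range of causal estimates like the one described above.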

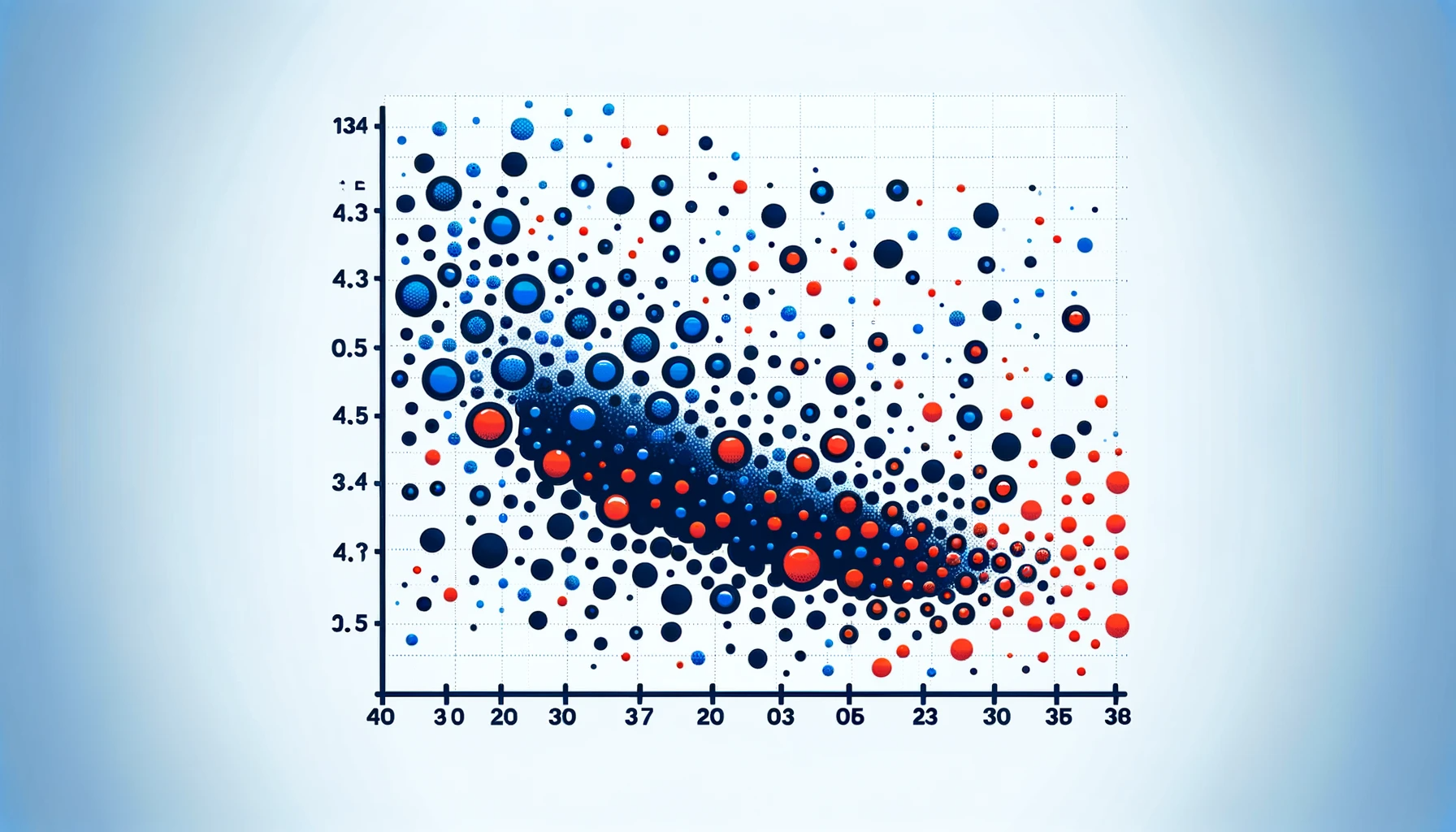

Demystifying the weights of regression: Heterogeneous effects, misspecification, and several longstanding, equivalent solutions

In observational and experimental settings, researchers have often used regression to estimate an average treatment effect (ATE) while adjusting for observables (X). Since at least Angrist (1998), we have known that the regression coefficient produces an awkwardly weighted average of strata-wise treatment effects not generally equal to the ATE. We demystify this weighting, which is best understood as merely a symptom of an OLS specification ill-equipped to handle heterogeneous effects. Several natural solutions (imputation/g-computation, interacting X with the treatment, and mean balancing) sidestep this problem and offer very simple alternatives to regressing Y on D and X. We provide new derivations of these weights, show the exact equivalence of imputation and interaction estimators, describe the underlying assumptions required for these alternatives to work, and illustrate the consequences of these findings for estimation in both observational and experimental designs.
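A small noise-free example makes the weighting concrete. It assumes a single binary covariate X with two strata; all counts and effect sizes are hypothetical. The OLS coefficient on D is computed by partialling out X (Frisch-Waugh-Lovell), and the alternative averages the stratum-wise differences in means over the distribution of X, which is what imputation or a fully interacted regression yields in this saturated setting.

```python
# Hypothetical strata: equal sizes, different treatment shares and effects.
strata = [
    {"x": 0, "n": 100, "n_treated": 50, "baseline": 0.0, "effect": 1.0},
    {"x": 1, "n": 100, "n_treated": 10, "baseline": 2.0, "effect": 3.0},
]
n_total = sum(s["n"] for s in strata)

# True ATE: stratum effects weighted by stratum size.
true_ate = sum(s["n"] * s["effect"] for s in strata) / n_total

# OLS of Y on D and X: residualize D on X (subtract the stratum treated
# share), then regress Y on the residualized D.
numerator = 0.0
denominator = 0.0
for s in strata:
    p = s["n_treated"] / s["n"]
    for i in range(s["n"]):
        d = 1 if i < s["n_treated"] else 0
        y = s["baseline"] + s["effect"] * d  # noise-free outcome
        d_resid = d - p
        numerator += d_resid * y
        denominator += d_resid ** 2
ols_coef = numerator / denominator

# Imputation / interaction: stratum-wise difference in means, averaged
# over the distribution of X.
def diff_in_means(s):
    mean_treated = s["baseline"] + s["effect"]
    mean_control = s["baseline"]
    return mean_treated - mean_control

interacted_ate = sum(s["n"] * diff_in_means(s) for s in strata) / n_total

print(f"true ATE              = {true_ate:.3f}")
print(f"OLS coefficient on D  = {ols_coef:.3f}")
print(f"interacted/imputation = {interacted_ate:.3f}")
```

Here the OLS coefficient weights each stratum by n·p·(1 − p), where p is the stratum's treated share, so the stratum with a treated share near one half dominates and the coefficient falls well below the ATE of 2, while the interacted estimate recovers it.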

Seeing like a district: Understanding what close-election designs for leader characteristics can and cannot tell us

Many studies in the social sciences use close elections to test what happens when certain types of politicians get elected, such as men vs. women. In this project, Chad Hazlett and Andrew Bertoli are working to clarify what can be learned from these designs and to outline methodological tools that can be employed in this context. The project has important implications for studying leader effects from close elections.

Link: Draft manuscript

The impact of a community college online winter term

Chad Hazlett, Gabrielle Stanco, Davis Vo

This research project examines the impact of a community college's online winter term on enrollment and student outcomes using a variety of causal inference approaches (e.g., instrumental variable in randomized encouragement, stability controlled quasi-experiment, and selection on observables).

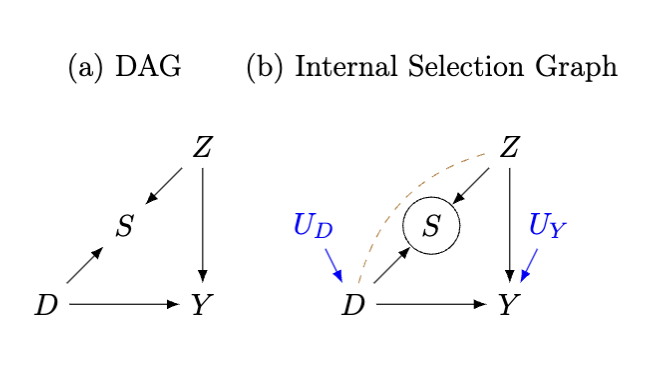

Revisiting sample selection as a threat to internal validity: A new analytical tool, lessons, and examples

Researchers often attempt to estimate the effect of a treatment on an outcome within a sample that has been drawn in some selective way from a larger population. Such selective sampling may not only change the population about which we make inferences, but can also bias our estimate of the causal effect even for the units in the sample, thus threatening the "internal validity" of the estimate. We propose "internal selection graphs", which annotate standard graphs to show the impacts of selection. We then extend standard criteria (backdoor/adjustment) so that users can determine what effects are identifiable, and what to condition on.

Causal progress with imperfect placebo treatments and outcomes

Placebo treatments and outcomes can be useful when attempting to make causal claims from observational data. Existing approaches, however, rely on two strong assumptions: (i) "perfect placebos", meaning placebo treatments have precisely zero effect on the outcome and the real treatment has precisely zero effect on a placebo outcome; and (ii) "equiconfounding", meaning that the treatment-outcome relationship where one is a placebo suffers the same amount of confounding as does the real treatment-outcome relationship, on some scale. We consider how these assumptions can be relaxed, within linear models. While applicable in many settings, we note that this also offers a relaxation for the difference-in-differences framework, taking the pre-treatment outcome as a placebo outcome, but relaxing the parallel trends (equiconfounding) assumption.
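The difference-in-differences connection can be illustrated with a deliberately simple sketch. It is not the project's estimator: it assumes a perfect placebo outcome (the pre-treatment outcome) and introduces a hypothetical parameter `m`, the assumed ratio of confounding in the real outcome to confounding in the placebo outcome. Setting `m = 1` corresponds to equiconfounding and reproduces the standard difference-in-differences estimate. All group means are made up.

```python
def placebo_adjusted_effect(diff_real, diff_placebo, m=1.0):
    """Treated-minus-control gap in the real outcome, net of assumed confounding.

    diff_placebo is the gap in the placebo (pre-treatment) outcome, which
    under a perfect placebo reflects confounding only. m scales that gap
    to the assumed confounding in the real outcome (hypothetical notation).
    """
    return diff_real - m * diff_placebo

# Hypothetical group means.
treated_pre, control_pre = 10.0, 8.0
treated_post, control_post = 15.0, 11.0

diff_placebo = treated_pre - control_pre   # gap before treatment
diff_real = treated_post - control_post    # gap after treatment

effects = {m: placebo_adjusted_effect(diff_real, diff_placebo, m)
           for m in (0.5, 1.0, 1.5)}
for m, effect in effects.items():
    print(f"m = {m:.1f} -> implied effect = {effect:.1f}")
```

Varying `m` away from 1 shows how the implied effect moves as the parallel trends assumption is relaxed in this simplified setting.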